Last week I held a Kubernetes Quickstart Workshop as part of the TOHacks 2022 Hype Week.

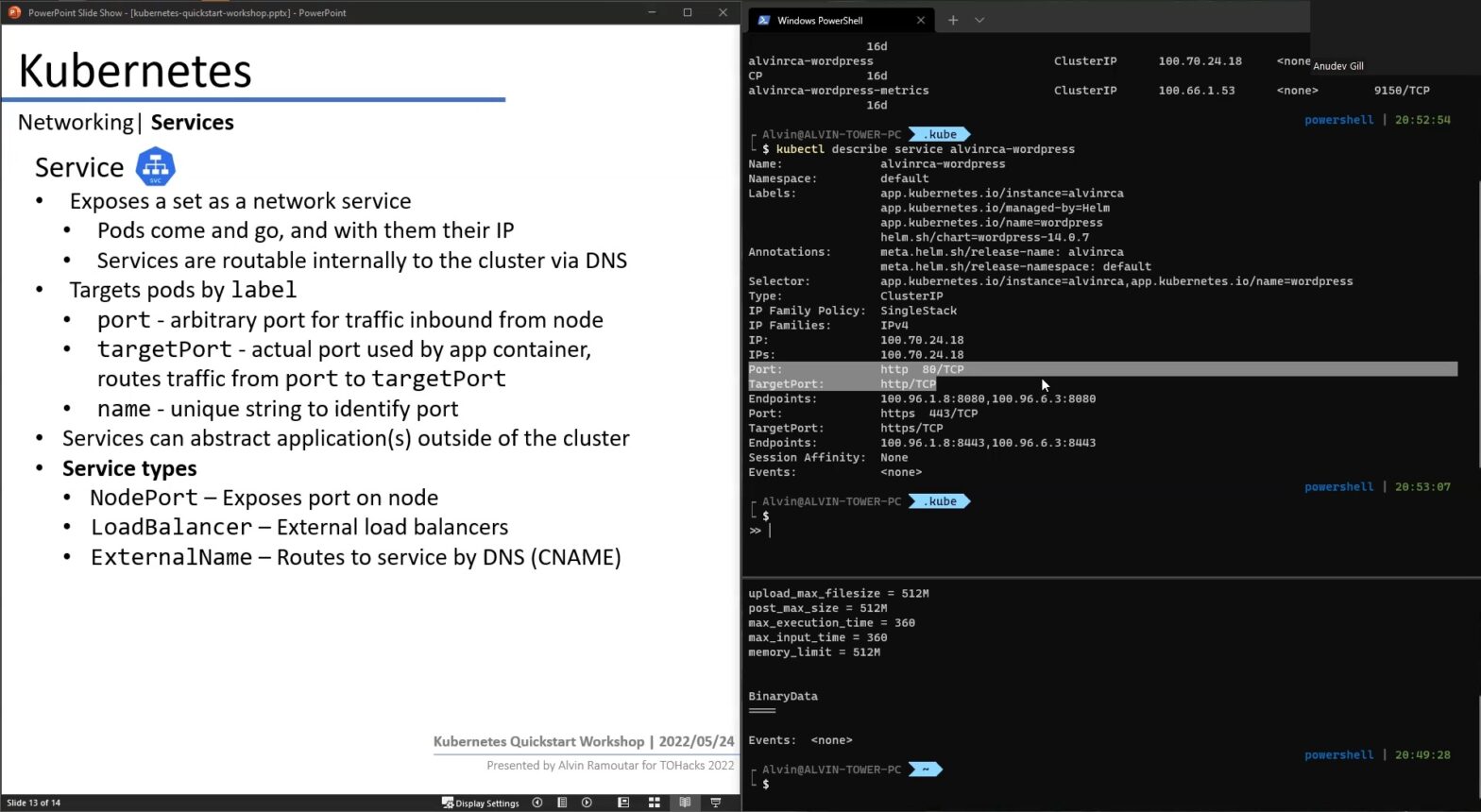

Together, we went over some basic Kubernetes concepts, API resources, and the problems they solved relative to non-cloud native architecture.

As part of this workshop I created some simple K8s cheat-sheets/material, along with a whole new Kubernetes cluster which I exposed as part of a hands-on lab.

Participants, who included high school and post-secondary students, found the workshop pretty cool. I’ve heard feedback post-workshop that their friends thought they were hacking as they used kubectl.

Kids, kubectl responsibly.

I also noted feedback that more detail on certain topics would’ve been great, and I would’ve liked that too. I barely managed to get through everything I wanted to in 60 minutes 🙁

The primary goal was to explore common questions and problems that someone hosting an application may encounter, and how Kubernetes can address them.

All in all, it was a great experience that I look forward to doing more often – not particular to Kubernetes, but cloud native architecture in general. As a cloud engineer, I’ve learned the hard way that this is anything but straightforward, and I hope others can learn from my struggles as they embark on their journeys in this space.

Workshop aside, I wanted to chat a little about the lab environment I created since I got questions post-lab from other Kubernetes aficionados.

I’ve been running Tanzu Community Edition on my home lab since its early days, both as a passion project having contributed to it, and because it integrates well with my home vSphere lab. As such, I already had a management cluster serving as my ‘cluster operator’, which I use to create/destroy clusters on the fly. This was no different, with the exception of creating a user fit for this public lab.

How I created a Kubernetes Lab in 15 minutes using Tanzu Community Edition

I should probably preface this by saying that this is NOT something I would encourage for any kind of persistent environment.

While this might be less dangerous if it were behind a VPN, the cluster we’re about to create risks leaking important vSphere configuration data, along with the credentials of the user that cluster resources will be created under. For example, if a user can fetch vsphere-config-secret from the kube-system namespace, consider your vSphere environment compromised.

This process assumes the following:

- You have an existing management cluster created

- The VSPHERE_CONTROL_PLANE_ENDPOINT in your Tanzu cluster config resolves to some internal IP that’ll be used for your workload cluster API, and resolves to your public IP externally

- You can port-forward your cluster API (there are probably better ways, which I’ll talk about later)
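The split DNS assumption above can be checked before creating anything. The following is only a sketch: it assumes `dig` is installed, and the function names and the choice of public resolver are mine, not part of the original setup.

```shell
# Resolve a hostname, optionally against a specific resolver.
# $1 = hostname, $2 = resolver IP (empty means the system default).
resolve_with() {
    if [ -n "$2" ]; then
        dig +short "$1" "@$2"
    else
        dig +short "$1"
    fi
}

# Compare the internal view (default resolver) with the external view
# (a public resolver). Returns 0 only if both resolve and they differ.
check_split_dns() {
    host=$1
    public_resolver=$2
    # tail -n 1 keeps the final address if a CNAME chain is printed first.
    internal_ip=$(resolve_with "$host" "" | tail -n 1)
    external_ip=$(resolve_with "$host" "$public_resolver" | tail -n 1)
    echo "internal view: ${internal_ip:-<none>}"
    echo "external view: ${external_ip:-<none>}"
    [ -n "$internal_ip" ] && [ -n "$external_ip" ] && [ "$internal_ip" != "$external_ip" ]
}
```

Run from inside the lab network, something like `check_split_dns k8s.alvinr.ca 1.1.1.1` should print two different addresses if the assumption holds.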

Deployment

Step 1. Run the following command using your cluster config on your Tanzu bootstrap host.

This will also be the longest step, depending on your hardware.

tanzu cluster create open --file ./open.k8s.alvinr.ca -v 9

TOHacks Tanzu workload cluster config

AVI_CA_DATA_B64: ""

AVI_CLOUD_NAME: ""

AVI_CONTROL_PLANE_HA_PROVIDER: ""

AVI_CONTROLLER: ""

AVI_DATA_NETWORK: ""

AVI_DATA_NETWORK_CIDR: ""

AVI_ENABLE: "false"

AVI_LABELS: ""

AVI_MANAGEMENT_CLUSTER_VIP_NETWORK_CIDR: ""

AVI_MANAGEMENT_CLUSTER_VIP_NETWORK_NAME: ""

AVI_PASSWORD: ""

AVI_SERVICE_ENGINE_GROUP: ""

AVI_USERNAME: ""

CLUSTER_CIDR: 100.96.0.0/11

CLUSTER_NAME: open

CLUSTER_PLAN: dev

CONTROL_PLANE_MACHINE_COUNT: "3"

ENABLE_AUDIT_LOGGING: "false"

ENABLE_CEIP_PARTICIPATION: "false"

ENABLE_MHC: "true"

IDENTITY_MANAGEMENT_TYPE: none

INFRASTRUCTURE_PROVIDER: vsphere

LDAP_BIND_DN: ""

LDAP_BIND_PASSWORD: ""

LDAP_GROUP_SEARCH_BASE_DN: ""

LDAP_GROUP_SEARCH_FILTER: ""

LDAP_GROUP_SEARCH_GROUP_ATTRIBUTE: ""

LDAP_GROUP_SEARCH_NAME_ATTRIBUTE: cn

LDAP_GROUP_SEARCH_USER_ATTRIBUTE: DN

LDAP_HOST: ""

LDAP_ROOT_CA_DATA_B64: ""

LDAP_USER_SEARCH_BASE_DN: ""

LDAP_USER_SEARCH_FILTER: ""

LDAP_USER_SEARCH_NAME_ATTRIBUTE: ""

LDAP_USER_SEARCH_USERNAME: userPrincipalName

OIDC_IDENTITY_PROVIDER_CLIENT_ID: ""

OIDC_IDENTITY_PROVIDER_CLIENT_SECRET: ""

OIDC_IDENTITY_PROVIDER_GROUPS_CLAIM: ""

OIDC_IDENTITY_PROVIDER_ISSUER_URL: ""

OIDC_IDENTITY_PROVIDER_NAME: ""

OIDC_IDENTITY_PROVIDER_SCOPES: ""

OIDC_IDENTITY_PROVIDER_USERNAME_CLAIM: ""

OS_ARCH: amd64

OS_NAME: photon

OS_VERSION: "3"

SERVICE_CIDR: 100.64.0.0/13

TKG_HTTP_PROXY_ENABLED: "false"

VSPHERE_CONTROL_PLANE_DISK_GIB: "40"

VSPHERE_CONTROL_PLANE_ENDPOINT: k8s.alvinr.ca

VSPHERE_CONTROL_PLANE_MEM_MIB: "16384"

VSPHERE_CONTROL_PLANE_NUM_CPUS: "4"

VSPHERE_DATACENTER: <>

VSPHERE_DATASTORE: <>

VSPHERE_FOLDER: <>

VSPHERE_NETWORK: <>

VSPHERE_PASSWORD: <>

VSPHERE_RESOURCE_POOL: <>

VSPHERE_SERVER: <>

VSPHERE_SSH_AUTHORIZED_KEY: |
  ssh-rsa <>

VSPHERE_TLS_THUMBPRINT: <>

VSPHERE_USERNAME: <>

VSPHERE_WORKER_DISK_GIB: "40"

VSPHERE_WORKER_MEM_MIB: "8192"

VSPHERE_WORKER_NUM_CPUS: "4"

WORKER_MACHINE_COUNT: "3"

* items denoted with <> have been redacted

Step 2. Retrieve the admin credential. You might want this later (plus, we’ll steal the certificate authority data from it).

tanzu cluster kubeconfig get open --admin --export-file admin-config-open

Step 3. Generate key and certificate signing request (CSR).

Here, we’ll prepare to create a native K8s credential.

Pay attention to the organization (O) element k8s.alvinr.ca: Kubernetes maps each O value in a client certificate to a group, and this group will be the subject of our RoleBinding later.

openssl genrsa -out tohacks.key 2048

openssl req -new -key tohacks.key -out tohacks.csr -subj "/CN=tohacks/O=tohacks/O=k8s.alvinr.ca"

Step 4. Open a shell session to one of your control plane nodes.

Feel free to do so however you want. My bootstrapping host had kubectl node-shell from another project, so I ended up using that.

Copy both the key and CSR into this node.
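Before signing, it may be worth confirming that the CSR from Step 3 really carries the group you intend to bind. This is a rough sketch, assuming `openssl` is on the path; the helper names are hypothetical, and the pattern is kept loose because different OpenSSL versions print the subject in slightly different formats.

```shell
# Print the subject line of a CSR (CN becomes the user, O values become groups).
csr_subject() {
    openssl req -in "$1" -noout -subject
}

# Succeed only if the CSR subject contains the given organization value.
# $1 = path to CSR, $2 = expected O value (treated as a regex, so dots match loosely).
csr_has_org() {
    csr_subject "$1" | grep -Eq "O ?= ?$2"
}
```

For example, `csr_has_org tohacks.csr k8s.alvinr.ca && echo "group present"` should print the message for the CSR generated above.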

Step 5. Sign the CSR.

The lab only needed to live for the duration of TOHacks 2022 Hype Week, so 7 days of validity was enough.

openssl x509 -req -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -days 7 -in tohacks.csr -out tohacks.crt

Step 6. Encode the key and cert in base64. We’ll use these later to create our kubeconfig.

Copy these two back to your host.

cat tohacks.key | base64 | tr -d '\n' > tohacks.b64.key

cat tohacks.crt | base64 | tr -d '\n' > tohacks.b64.crt

Step 7. Build kubeconfig manifest.

Here’s an example built for this lab. This is what I distributed to my participants.

We didn’t bother going over importing this credential to their kubeconfig path; we just provided it manually to every command via the --kubeconfig flag.

TOHacks kubeconfig credential

---
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: <ADMIN_KUBECONFIG_STEP_2>
    server: https://k8s.alvinr.ca:6443
  name: public
contexts:
- context:
    cluster: public
    namespace: default
    user: tohacks
  name: tohacks
current-context: tohacks
kind: Config
preferences: {}
users:
- name: tohacks
  user:
    client-certificate-data: <tohacks.b64.crt_STEP_6>
    client-key-data: <tohacks.b64.key_STEP_6>

Step 8. Create a Role.

Here is where we define what our lab participants can do. In this case, I created a Role scoped to the default namespace that grants them pseudo read-only access (they can only use the get/watch/list verbs).

TOHacks read-only participant role

---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: tohacks
rules:
- apiGroups: ["*"]
  resources: ["*"]
  verbs: ["get", "watch", "list"]

Step 9. Create a RoleBinding.

Here is where we define our subject, in this case the org used in the signed cert file from the CSR generated in Step 3.

TOHacks read-only participant role binding

---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tohacks
  namespace: default
subjects:
- kind: Group
  name: k8s.alvinr.ca
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: tohacks
  apiGroup: rbac.authorization.k8s.io

Step 10. Deploy roles.

kubectl apply -f tohacks-role.yaml

kubectl apply -f tohacks-rolebinding.yaml

Step 11. Expose K8s API (port 6443).

Finally, the part I disliked the most: exposing my cluster API on the host defined by VSPHERE_CONTROL_PLANE_ENDPOINT in Step 1.

I’m sure there are lots of better ways to do this. Another one that occurred to me was sitting it behind a reverse proxy like nginx, since K8s API traffic is still standard HTTPS (though with client certificate authentication, the proxy would need to pass TLS through rather than terminate it).
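Before handing the kubeconfig from Step 7 to anyone, a quick check of what the participant identity can and cannot do seems prudent, especially given the earlier warning about vsphere-config-secret. The sketch below relies on `kubectl auth can-i`, which exits non-zero when the answer is no. The kubeconfig file name and the function names are hypothetical.

```shell
# Path to the kubeconfig handed to participants (hypothetical file name).
LAB_KUBECONFIG=${LAB_KUBECONFIG:-./tohacks-kubeconfig.yaml}

# Ask the API server whether the participant identity may perform an action.
# Usage: participant_can <verb> <resource> <namespace>
# Note: a connection error also returns non-zero, so it reads as "denied".
participant_can() {
    kubectl --kubeconfig "$LAB_KUBECONFIG" auth can-i "$1" "$2" --namespace "$3" --quiet >/dev/null 2>&1
}

# Check one permission that should be allowed and two that should be denied.
smoke_test_rbac() {
    failures=0
    # Expected to be allowed by the read-only Role in "default".
    participant_can list pods default || { echo "FAIL: cannot list pods in default"; failures=$((failures + 1)); }
    # Expected to be denied: writes in default, and anything in kube-system.
    participant_can create pods default && { echo "FAIL: can create pods in default"; failures=$((failures + 1)); }
    participant_can get secrets kube-system && { echo "FAIL: can read secrets in kube-system"; failures=$((failures + 1)); }
    [ "$failures" -eq 0 ] && echo "RBAC looks as expected"
}
```

Calling `smoke_test_rbac` from outside your network also doubles as a test that the exposed endpoint is reachable, since an unreachable API makes the first check fail.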

Tear-down

When TOHacks 2022 concluded, the lab was swiftly sent to the void it came from.

tanzu cluster delete open